When you post about your work as a sex worker on Instagram, OnlyFans, or a forum, you’re not just sharing your life; you’re exercising free speech. But what happens when that post gets taken down? Or when a platform shuts down your account because it’s scared of legal trouble? The answer lies in a 27-year-old law called CDA 230, and it’s the reason most online platforms don’t get sued for what their users say, even when it involves sex work.

What Is CDA 230, Really?

CDA 230 stands for Section 230 of the Communications Decency Act of 1996. It’s not a law about censorship. It’s not a law about sex work. It’s a law that says: platforms aren’t legally responsible for what their users post.

Before CDA 230, online services faced a legal trap. In 1991, a federal court in New York ruled in Cubby v. CompuServe that CompuServe was not liable for defamatory content it carried, because it didn’t review that content and so acted as a mere distributor. But in 1995, a New York state court looked at a different case, Stratton Oakmont v. Prodigy, and decided that because Prodigy moderated content, it was a publisher and therefore legally responsible. That created a perverse incentive: if you moderated, you could be sued as a publisher. If you didn’t, you were safer. The logical response for platforms was to stop moderating entirely.

CDA 230 fixed that. It said: if you’re a platform, you can moderate content without becoming legally responsible for it. You can remove hate speech, scams, or explicit material and still be protected. You can also choose to leave it up. Either way, you’re not the publisher. The user is.

Why Does This Matter for Sex Workers?

Sex workers rely on online platforms to find clients, share safety tips, and build community. Many use Instagram to post discreetly. Others use Patreon, OnlyFans, or specialized forums like Reddit’s r/sexwork. These platforms aren’t hosting illegal activity. They’re hosting speech.

But here’s the problem: platforms are terrified. They see words like “escort,” “massage,” or “companionship,” and they panic. They don’t know if they’re breaking state laws, federal laws, or international laws. So they ban accounts. They shadowban posts. They delete entire communities under pressure from law enforcement or payment processors like Stripe and PayPal.

CDA 230 should protect them from that fear. But it doesn’t stop them from acting out of caution. That’s the gap. The law says they’re not liable. But it doesn’t stop them from self-censoring because they’re scared of bad PR, lost revenue, or criminal investigations.

In 2018, Congress passed FOSTA-SESTA, which carved out an exception to CDA 230. It made platforms liable if they knowingly facilitated sex trafficking. But the law was written so broadly that it didn’t just target traffickers. It also swept in consensual adult work. Websites like Backpage were shut down. Reddit banned r/prostitution. OnlyFans started requiring ID verification and banned “sexual services.”

That’s the irony: the law meant to protect victims ended up pushing sex workers offline, into more dangerous situations. Without online screening, many now meet clients in person with no way to verify identities or share location details with peers.

How CDA 230 Actually Works in Practice

Let’s say a sex worker posts: “I’m available for private sessions in Austin on Friday. $150/hr. No drugs, no violence.”

Under CDA 230:

- The platform (say, Instagram) isn’t liable if that post is legal in Texas.

- The platform isn’t liable if the post violates their own terms of service.

- The platform can delete the post without admitting it’s illegal.

- The platform can’t be sued just because someone used their service to arrange a legal transaction.

That last point is critical. In the U.S., selling sex is legal in some counties in Nevada. In most other states, it’s illegal, but the act of advertising it online isn’t necessarily a crime. CDA 230 protects platforms from being held responsible in civil suits and under most state laws for what users say, even if that speech is borderline or controversial. It has never shielded platforms from federal criminal law.

Think of it like a bookstore. If someone buys a book on how to hack a car from Amazon, Amazon isn’t charged with aiding theft. Why? Because they’re not the author. They’re the distributor. CDA 230 treats online platforms the same way.

What Happens When Platforms Ignore CDA 230?

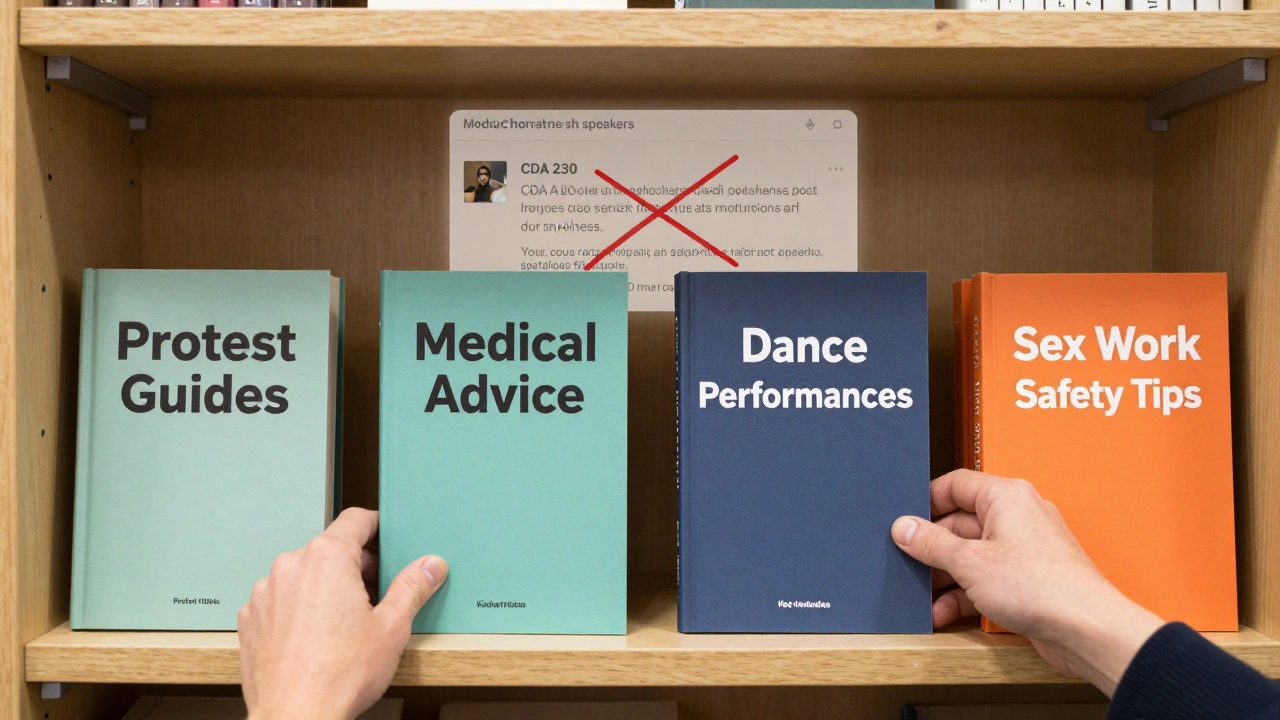

Many platforms act like they’re legally obligated to police speech, even when they’re not. Take OnlyFans. In August 2021, it announced a ban on sexually explicit content, then suspended the plan days later after a backlash from creators. It didn’t cite the law. It cited pressure from its banking and payment partners.

That’s not CDA 230. That’s corporate fear.

Payment processors are another problem. Stripe, PayPal, and banks often freeze accounts linked to adult content, even when the content is legal. They don’t cite CDA 230. They cite their own risk policies. And because platforms rely on these services to get paid, they have no choice but to comply.

As a result, sex workers are forced into cash-only arrangements. They lose access to banking, tax reporting tools, and financial safety nets. Some move to decentralized platforms like Mastodon or encrypted messaging apps like Signal. But those tools don’t scale. They’re not built for visibility or income.

Who Benefits from CDA 230?

Everyone who uses the internet benefits, from activists in Iran posting about human rights, to LGBTQ+ teens finding support groups, to sex workers sharing safety checklists. Without CDA 230, platforms like Twitter, YouTube, and TikTok would either be full of illegal content or completely locked down.

Imagine if every time someone posted about a protest, a medical condition, or their job as a stripper, the platform had to verify the legality of every comment. It would be impossible. The cost would be astronomical. The result? Fewer platforms. Fewer voices. Fewer options for people who need them most.

Sex workers aren’t asking for special treatment. They’re asking to be treated like everyone else: as speakers, not criminals.

Is CDA 230 Under Threat?

Yes. And it’s not just politicians. Tech companies themselves are quietly lobbying to weaken it. Why? Because moderation is expensive. And if they can shift responsibility onto users, or get lawmakers to force them to censor more, they can reduce their legal risk.

In 2023, a bill in California tried to require platforms to prove they weren’t “facilitating” sex work by scanning all images for nudity. That’s not enforcement. That’s mass surveillance.

Meanwhile, the U.S. Supreme Court is considering cases that could redefine what “knowledge” means under FOSTA-SESTA. If the court rules that platforms are liable if they “should have known” about illegal content, it could kill CDA 230’s protection entirely.

That’s why advocacy groups like the Sex Workers Project and the Electronic Frontier Foundation are fighting to preserve it. They’re not defending predators. They’re defending the right of people to speak, earn, and survive online without being treated as criminals by default.

What Can Sex Workers Do?

Understand your rights. CDA 230 doesn’t protect you from arrest. But it does protect the platforms you use from most lawsuits over hosting your content, so the law gives them no reason to delete it just because it’s about sex work.

Use encrypted or decentralized platforms where possible. Signal and Matrix offer end-to-end encryption, and decentralized networks like Mastodon give you more control. Don’t rely on platforms that can shut you down with a click.

Document everything. If your account is suspended without explanation, save screenshots, timestamps, and communication logs. You may not be able to sue the platform, but you can report it to digital rights organizations.

Support advocacy. Groups like the Global Network of Sex Work Projects and the Red Umbrella Fund are working to change laws and protect online speech. Don’t assume this is just your problem. It’s a free speech issue.

And remember: the law doesn’t say your speech is legal. It says the platform doesn’t have to be punished for hosting it. That’s a small but powerful distinction.

What’s Next?

CDA 230 won’t last forever. But it’s still the best shield we have. The real battle isn’t in courtrooms. It’s in public perception. Until society stops seeing sex work as inherently criminal, platforms will keep acting like they’re guilty until proven innocent.

Change won’t come from lawsuits. It’ll come from people speaking up. From clients understanding the difference between exploitation and consensual labor. From lawmakers realizing that criminalizing speech doesn’t protect anyone; it just pushes people into the dark.

Until then, CDA 230 remains the quiet guardian of online freedom: for sex workers, for activists, for everyone who dares to speak.